- Web Desk

- Feb 16, 2026

OpenAI’s latest AI model: Seeing, conversing, emotion recognition, and beyond

- May 14, 2024

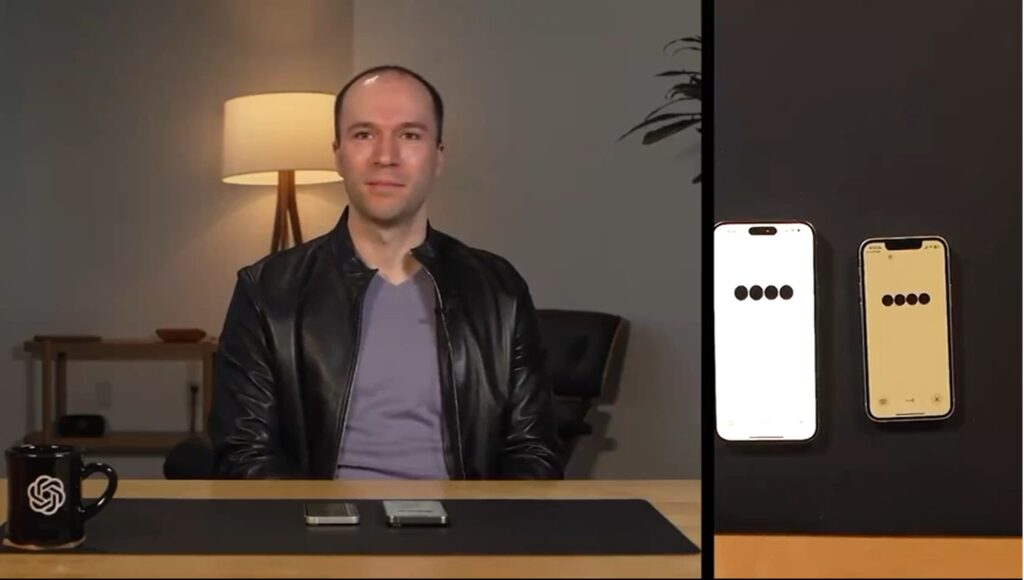

WEB DESK: OpenAI’s latest artificial intelligence (AI) model has demonstrated remarkable capabilities. In a recent live presentation, it held a conversation with another AI that could “see” the world through a mobile device camera.

During the demonstration, the host interacted with both AIs in a relaxed and friendly manner, akin to conversing with another human. Both AIs also responded with humour and ease during the exchange.

The demonstration featured two AI entities: one equipped with visual perception through the camera, while the other lacked this capability. The host prompted the two AIs to engage in dialogue. The AI with visual perception adeptly described the host’s attire. It talked about the “black leather jacket” and “light-coloured shirt” the host was wearing. It even provided observations about the room’s ambiance and lighting.

In a playful exchange, the AI without visual perception asked the AI with vision what it could observe in the environment. The latter responded with detailed descriptions, demonstrating its ability to discern nuances such as the “modern industrial feel” of the surroundings.

The AI displayed a sense of humour by remarking on another person who entered the scene and jokingly made “bunny ears”, before refocusing on the primary subject.

The latest model by OpenAI also demonstrated emotional intelligence by commenting on the mood of the interaction.

The host further tested the AI’s capabilities by asking it to sing. The AI without visual perception complied, beginning a melodic song about the host. The host then issued increasingly complex directives, challenging the AI to alternate songs and fulfil intricate requests beyond simple singing.

In a collaborative effort, both AIs engaged in a musical exchange, each delivering one line of a song in turn. This demonstrated their ability to adapt and collaborate dynamically in response to constantly evolving instructions or “prompts”.

All these capabilities of the latest AI model hint at its potential to serve as a versatile “AI companion” to a human, of the kind seen mostly in science fiction shows.

OpenAI’s latest AI model is capable of engaging in intelligent, humorous conversations, interpreting visual stimuli, including human emotions, and even demonstrating musical talent.

The latest demonstration shows how far AI has progressed, with big tech companies racing to get ahead in this field. It suggests that in the future, AI could blend into our daily lives, making technology more personal and improving how we interact with computers by understanding us better.